I’ve recently had the opportunity to dust off my SSIS skills and revisit this toolset. On my most recent project, I needed to use SSIS to pull data from a WCF web service that a) used the net.tcp protocol, and b) used transport security with a client X.509 certificate for authentication.

That was fun enough by itself. Configuring WCF tends to be non-trivial even when you don’t have to tweak app.config files for SSIS. In fact, one of my goals was to avoid editing those files, which meant putting code in the Script Component in my data flow to configure the channel, the security settings, and so on.

Luckily, I found examples of doing this with wsHttpBinding, so it wasn’t a stretch to adapt them to netTcpBinding, with the changes required to support transport security authenticated by a client certificate.

Here’s the code…

```csharp
using System;
using System.Data;
using System.ServiceModel;
using System.Security.Cryptography.X509Certificates;
using Microsoft.SqlServer.Dts.Pipeline.Wrapper;
using Microsoft.SqlServer.Dts.Runtime.Wrapper;
// Service reference generated inside the Script Component project
using SC_13defb16ae45414dbac17137434aeca0.csproj.PaymentSrv;

[Microsoft.SqlServer.Dts.Pipeline.SSISScriptComponentEntryPointAttribute]
public class ScriptMain : UserComponent
{
    ChannelFactory<IProfile> channelFactory;
    IProfile client;

    public override void PreExecute()
    {
        base.PreExecute();

        bool fireAgain = false;
        // Log the same URL that is actually used for the endpoint below
        string serviceUrl = this.Variables.varPaymentServiceUrl;
        this.ComponentMetaData.FireInformation(0, "Pull From Profile Service.PreExecute",
            "Service URI: '" + serviceUrl + "'", null, 0, ref fireAgain);
        this.ComponentMetaData.FireInformation(0, "Pull From Profile Service.PreExecute",
            "Cert Fingerprint: '" + this.Variables.varClientCertFingerprint + "'", null, 0, ref fireAgain);

        // Create the binding: net.tcp with transport security and a client certificate
        NetTcpBinding binding = new NetTcpBinding();
        binding.Security.Mode = SecurityMode.Transport;
        binding.Security.Transport.ClientCredentialType = TcpClientCredentialType.Certificate;
        binding.Security.Transport.ProtectionLevel = System.Net.Security.ProtectionLevel.EncryptAndSign;

        EndpointAddress endpointAddress = new EndpointAddress(serviceUrl);
        channelFactory = new ChannelFactory<IProfile>(binding, endpointAddress);

        // Select the client certificate from LocalMachine\My by thumbprint
        channelFactory.Credentials.ClientCertificate.SetCertificate(
            StoreLocation.LocalMachine,
            StoreName.My,
            X509FindType.FindByThumbprint,
            this.Variables.varClientCertFingerprint); // " x8 60 66 09 t6 10 60 2d 99 d6 51 f7 5c 3b 25 bt 2e 62 32 79"

        // Validate the service certificate using peer trust
        channelFactory.Credentials.ServiceCertificate.Authentication.CertificateValidationMode =
            System.ServiceModel.Security.X509CertificateValidationMode.PeerTrust;

        // Create and open the channel; the TLS handshake (and use of the private key) happens here
        client = channelFactory.CreateChannel();
        IClientChannel channel = (IClientChannel)client;
        channel.Open();
        this.ComponentMetaData.FireInformation(0, "Pull From Profile Service.PreExecute",
            "Open Succeeded.", null, 0, ref fireAgain);
    }

    public override void PostExecute()
    {
        base.PostExecute();

        // Close the channel, then the ChannelFactory
        IClientChannel channel = (IClientChannel)client;
        channel.Close();
        channelFactory.Close();
    }

    public override void Input0_ProcessInputRow(Input0Buffer Row)
    {
        Guid txGuid = Guid.NewGuid();
        Profile profile = null;
        try
        {
            profile = client.getProfile(txGuid, Row.ProfileId);
            Row.PSProfileType = GetProfileType(profile);
        }
        catch (Exception ex)
        {
            // Log the failure and continue with the next row
            string message = ex.Message;
            Log(message, 0, null);
        }
    }

    // Placeholder: map the returned profile to a profile-type code
    private string GetProfileType(Profile profile)
    {
        return "x";
    }
}
```
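The thumbprint in varClientCertFingerprint is handed straight to X509FindType.FindByThumbprint. A value copied from the certificate's Details tab comes with spaces between the bytes (like the commented-out value in the code) and sometimes an invisible leading character, either of which can make the lookup fail. Here is a minimal sketch of a helper that cleans the value first; ThumbprintUtil and Normalize are hypothetical names, not part of SSIS or WCF, and the 40-digit check assumes a SHA-1 thumbprint.

```csharp
using System;
using System.Linq;

// Hypothetical helper: cleans a pasted thumbprint before it is used
// with X509FindType.FindByThumbprint.
public static class ThumbprintUtil
{
    public static string Normalize(string raw)
    {
        if (raw == null)
            throw new ArgumentNullException("raw");

        // Keep only hexadecimal digits; this drops the spaces and any invisible
        // characters (such as U+200E) that copy/paste can introduce.
        string hex = new string(raw.Where(c => Uri.IsHexDigit(c)).ToArray()).ToUpperInvariant();

        // Assumption: a SHA-1 thumbprint, i.e. 20 bytes = 40 hex digits.
        if (hex.Length != 40)
            throw new FormatException("Expected 40 hex digits in the thumbprint, found " + hex.Length + ".");

        return hex;
    }
}
```

In PreExecute you would then pass ThumbprintUtil.Normalize(this.Variables.varClientCertFingerprint) to SetCertificate instead of the raw variable, so a bad value fails early with a clear message.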

So one of the challenges I encountered while using this method had to do with the client certificate. This error drove me nuts:

```
The credentials supplied to the package were not recognized.

Server stack trace:
at System.Net.SSPIWrapper.AcquireCredentialsHandle(SSPIInterface SecModule, String package, CredentialUse intent, SecureCredential scc)
at System.Net.Security.SecureChannel.AcquireCredentialsHandle(CredentialUse credUsage, SecureCredential& secureCredential)
at System.Net.Security.SecureChannel.AcquireClientCredentials(Byte[]& thumbPrint)
at System.Net.Security.SecureChannel.GenerateToken(Byte[] input, Int32 offset, Int32 count, Byte[]& output)
at System.Net.Security.SecureChannel.NextMessage(Byte[] incoming, Int32 offset, Int32 count)
at System.Net.Security.SslState.StartSendBlob(Byte[] incoming, Int32 count, AsyncProtocolRequest asyncRequest)
at System.Net.Security.SslState.ProcessReceivedBlob(Byte[] buffer, Int32 count, AsyncProtocolRequest asyncRequest)
at System.Net.Security.SslState.StartReadFrame(Byte[] buffer, Int32 readBytes, AsyncProtocolRequest asyncRequest)
at System.Net.Security.SslState.StartReceiveBlob(Byte[] buffer, AsyncProtocolRequest asyncRequest)
at System.Net.Security.SslState.CheckCompletionBeforeNextReceive(ProtocolToken message, AsyncProtocolRequest asyncRequest)
at System.Net.Security.SslState.StartSendBlob(Byte[] incoming, Int32 count, AsyncProtocolRequest asyncRequest)
at System.Net.Security.SslState.ForceAuthentication(Boolean receiveFirst, Byte[] buffer, AsyncProtocolRequest asyncRequest)
at System.Net.Security.SslState.ProcessAuthentication(LazyAsyncResult lazyResult)
at System.Net.Security.SslStream.AuthenticateAsClient(String targetHost, X509CertificateCollection clientCertificates, SslProtocols enabledSslProtocols, Boolean checkCertificateRevocation)
at System.ServiceModel.Channels.SslStreamSecurityUpgradeInitiator.OnInitiateUpgrade(Stream stream, SecurityMessageProperty& remoteSecurity)
at System.ServiceModel.Channels.StreamSecurityUpgradeInitiatorBase.InitiateUpgrade(Stream stream)
at System.ServiceModel.Channels.ConnectionUpgradeHelper.InitiateUpgrade(StreamUpgradeInitiator upgradeInitiator, IConnection& connection, ClientFramingDecoder decoder, IDefaultCommunicationTimeouts defaultTimeouts, TimeoutHelper& timeoutHelper)
at System.ServiceModel.Channels.ClientFramingDuplexSessionChannel.SendPreamble(IConnection connection, ArraySegment`1 preamble, TimeoutHelper& timeoutHelper)
at System.ServiceModel.Channels.ClientFramingDuplexSessionChannel.DuplexConnectionPoolHelper.AcceptPooledConnection(IConnection connection, TimeoutHelper& timeoutHelper)
at System.ServiceModel.Channels.ConnectionPoolHelper.EstablishConnection(TimeSpan timeout)
at System.ServiceModel.Channels.ClientFramingDuplexSessionChannel.OnOpen(TimeSpan timeout)
at System.ServiceModel.Channels.CommunicationObject.Open(TimeSpan timeout)
at System.ServiceModel.Channels.ServiceChannel.OnOpen(TimeSpan timeout)
at System.ServiceModel.Channels.CommunicationObject.Open(TimeSpan timeout)
at System.ServiceModel.Channels.CommunicationObject.Open()

Exception rethrown at [0]:
at System.Runtime.Remoting.Proxies.RealProxy.HandleReturnMessage(IMessage reqMsg, IMessage retMsg)
at System.Runtime.Remoting.Proxies.RealProxy.PrivateInvoke(MessageData& msgData, Int32 type)
at System.ServiceModel.ICommunicationObject.Open()
at ScriptMain.PreExecute()
at Microsoft.SqlServer.Dts.Pipeline.ScriptComponentHost.PreExecute()
```

Look closely and you’ll see this is an authentication error. Tracing through the code, it happens AFTER the client certificate has been successfully retrieved from the certificate store: the call to SetCertificate succeeds without incident.

The error hits when the code opens the channel and tries to use the private key associated with the client certificate to prove to the server that “I’m a valid client.” (Note the SslStream.AuthenticateAsClient and AcquireCredentialsHandle frames in the trace.)
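Because the certificate lookup and the use of its private key happen at different points, it can help to test them separately under the account that runs the package. Here is a rough diagnostic sketch, assuming .NET Framework 4.6 or later (for GetRSAPrivateKey) and an RSA key; ClientCertCheck and KeyAccessResult are hypothetical names. Signing a few bytes forces the private key to actually be opened, which is the step that failed above.

```csharp
using System;
using System.Security.Cryptography;
using System.Security.Cryptography.X509Certificates;

// Hypothetical result type: from "cannot even find it" to "key is usable".
public enum KeyAccessResult { CertificateNotFound, NoPrivateKey, KeyNotAccessible, KeyAccessible }

public static class ClientCertCheck
{
    // Finds a certificate in the "My" store at 'location', then uses its private key.
    // This separates the lookup (what SetCertificate does) from the key use
    // (what happens during channel.Open()).
    public static KeyAccessResult Check(StoreLocation location, string thumbprint)
    {
        X509Store store = new X509Store(StoreName.My, location);
        store.Open(OpenFlags.ReadOnly);
        try
        {
            X509Certificate2Collection matches =
                store.Certificates.Find(X509FindType.FindByThumbprint, thumbprint, false);
            if (matches.Count == 0)
                return KeyAccessResult.CertificateNotFound;

            X509Certificate2 cert = matches[0];

            // HasPrivateKey only says a key is associated; it does not prove we can open it.
            if (!cert.HasPrivateKey)
                return KeyAccessResult.NoPrivateKey;

            try
            {
                // Assumes an RSA key; GetRSAPrivateKey returns null for other key types.
                using (RSA key = cert.GetRSAPrivateKey())
                {
                    if (key == null)
                        return KeyAccessResult.NoPrivateKey;

                    // Signing forces the private key to be opened and used.
                    key.SignData(new byte[] { 1, 2, 3 }, HashAlgorithmName.SHA256, RSASignaturePadding.Pkcs1);
                }
                return KeyAccessResult.KeyAccessible;
            }
            catch (CryptographicException)
            {
                // Typically thrown (e.g. "Keyset does not exist") when the account lacks rights on the key.
                return KeyAccessResult.KeyNotAccessible;
            }
        }
        finally
        {
            store.Close();
        }
    }
}
```

In the failing state, calling ClientCertCheck.Check(StoreLocation.LocalMachine, thumbprint) would be expected to return KeyNotAccessible rather than CertificateNotFound, which matches what the stack trace shows: the certificate is found, but its key cannot be used.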

This was maddening because I was an administrator on the machine and had installed the client certificate into the certificate store myself. It had worked initially, and there was no indication of a problem retrieving the certificate from the store.

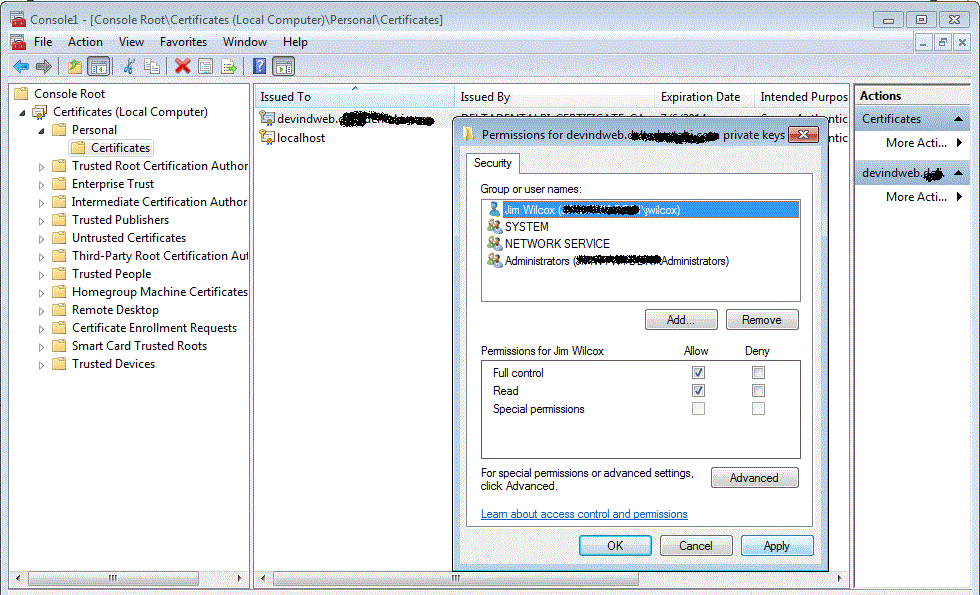

It turns out that, when using the machine store under these circumstances, I needed to give myself explicit permission on the client certificate’s private key so that the key could be used along with the certificate when the channel opened. This was counter-intuitive for two *additional* reasons: 1) I was an administrator on the box, so I should already have had this permission through my membership in the Administrators group (which, as you can see in the screenshot below, also had access). 2) It worked the day before. When I originally imported the private key into the key store, it appears that somewhere in the depths of Windows 7 (and this applied to Server 2008 R2 as well) I still had permission in my active session context. When I logged out, that login context went away; coming back the next day and logging in again, I didn’t realize I would no longer be able to access the key.

Giving myself explicit permission, as shown below, allowed me to run my SSIS package both within Visual Studio and from SSMS.
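The permission above was granted interactively. If you need to script the same change (for example on a build or test server), here is a sketch that adds a read rule to the private key file. It assumes the key was imported through a legacy CryptoAPI provider, so that RSACryptoServiceProvider exposes its container name and the file lives under ProgramData\Microsoft\Crypto\RSA\MachineKeys; CNG keys are stored elsewhere and are not handled. PrivateKeyAcl, KeyFilePath, and GrantRead are hypothetical names, and the code must run under an account that can already open the key and change its ACL (for example, an elevated administrator).

```csharp
using System;
using System.IO;
using System.Security.AccessControl;
using System.Security.Cryptography;
using System.Security.Cryptography.X509Certificates;

public static class PrivateKeyAcl
{
    // Assumption: a CryptoAPI machine key, stored as a file named after its
    // unique container name under %ProgramData%\Microsoft\Crypto\RSA\MachineKeys.
    public static string KeyFilePath(string uniqueKeyContainerName)
    {
        string programData = Environment.GetFolderPath(Environment.SpecialFolder.CommonApplicationData);
        return Path.Combine(programData, "Microsoft", "Crypto", "RSA", "MachineKeys", uniqueKeyContainerName);
    }

    // Grants 'account' (e.g. @"DOMAIN\user") read access to the certificate's private key file.
    public static void GrantRead(X509Certificate2 cert, string account)
    {
        // Opening the key here requires that the caller already has access to it.
        RSACryptoServiceProvider csp = cert.PrivateKey as RSACryptoServiceProvider;
        if (csp == null)
            throw new NotSupportedException("Not a CryptoAPI RSA key; this sketch only handles that case.");

        FileInfo keyFile = new FileInfo(KeyFilePath(csp.CspKeyContainerInfo.UniqueKeyContainerName));

        // Read the current ACL, add an allow-read rule for the account, and write it back.
        FileSecurity acl = keyFile.GetAccessControl();
        acl.AddAccessRule(new FileSystemAccessRule(account, FileSystemRights.Read, AccessControlType.Allow));
        keyFile.SetAccessControl(acl);
    }
}
```

For example, GrantRead(cert, WindowsIdentity.GetCurrent().Name) would give the current account its own explicit read rule on the key, so access no longer depends on group membership or on the session in which the key was imported.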